Coursera - Computer Science: Programming With A Purpose

Week 4: Input And Output - Shannon Entropy

Write a program ShannonEntropy.java that takes a command-line integer m; reads a sequence of integers between 1 and m from standard input; and prints the Shannon entropy to standard output, with 4 digits after the decimal point. The Shannon entropy of a sequence of integers is given by the formula:

H = -(p1 log2 p1 + p2 log2 p2 + … + pm log2 pm)

where pi denotes the proportion of integers whose value is i. If pi = 0, then treat pi log2 pi as 0.

~/Desktop/io> javac ShannonEntropy.java

~/Desktop/io> cat fair-coin.txt

1 1 1 1 2 1 2 1 1 2

2 2 2 2 1 2 1 2 2 1

~/Desktop/io> java ShannonEntropy 2 < fair-coin.txt

1.0000

~/Desktop/io> cat loaded-die.txt

3 2 6 2 4 3 2 1 2 2 1 3 2 3 2 2

~/Desktop/io> java ShannonEntropy 6 < loaded-die.txt

1.8750

~/Desktop/io> java DiscreteDistribution 1000000 80 20 | java-introcs ShannonEntropy 2

0.7221

~/Desktop/io> java DiscreteDistribution 1000000 80 20 | java-introcs ShannonEntropy 2

0.7217

Step-by-step calculation. Consider the following sequence of 16 integers generated from a loaded die.

3 2 6 2 4 3 2 1 2 2 1 3 2 3 2 2

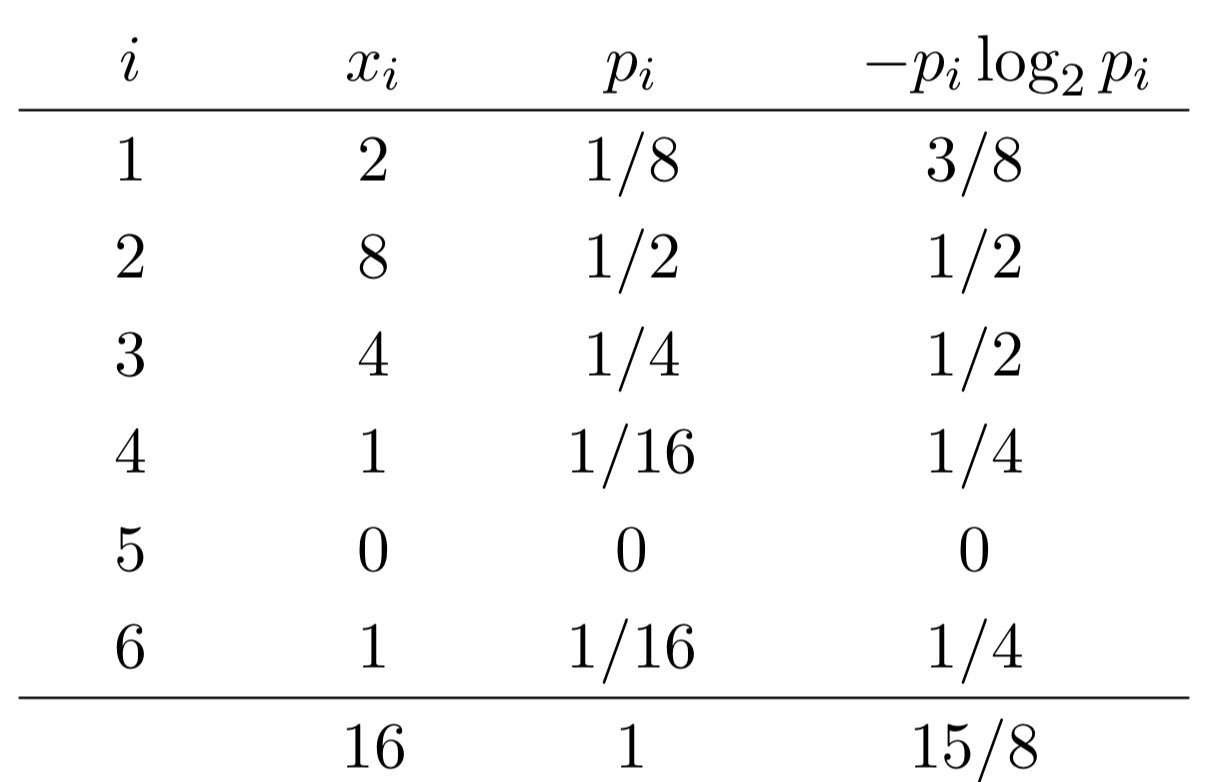

This table shows the frequencies xi, the proportions pi, and the −pi log2 pi terms:

The Shannon entropy is 1.875 = 15/8.

The Shannon entropy is a measure of the rate of information produced by a random source, such as the outcomes of flipping a fair coin or rolling a loaded die. It is a fundamental concept in information theory and data compression.

Note: the above description is copied from Coursera and converted to markdown for convenience

Solution:

public class ShannonEntropy {

public static void main(String[] args) {

final int m = Integer.parseInt(args[0]);

int count = 0;

final int[] proportions = new int[m];

while (!StdIn.isEmpty()) {

int number = StdIn.readInt();

proportions[number - 1]++;

count++;

}

double h = 0.0;

double p = 0.0;

for (int i = 0; i < proportions.length; i++) {

if (proportions[i] != 0) {

p = (proportions[i] / (double) count);

// log2N = log10(N)/log10(2)

final double a = Math.log10(p);

final double b = Math.log10(2);

final double pi = -(p * (a / b));

h += pi;

}

}

StdOut.printf("%.4f", h);

}

}

Link To: Java Source Code